- Deepfakes are synthetic content, created with the aid of artificial intelligence, which can appear to show people saying and doing things they never actually said or did.

- Initially largely used to produce pranks and involuntary pornography, deepfakes are increasingly being used as political tools to spread disinformation.

- Technological developments mean deepfakes are likely to be "deployed quite broadly" in the 2024 presidential election, according to one expert.

- The existence of deepfakes is also allowing dishonest figures to deny the legitimacy of genuine embarrassing video and audio by claiming it was AI-generated.

- One academic told Newsweek the use of deepfakes for political purposes should be banned.

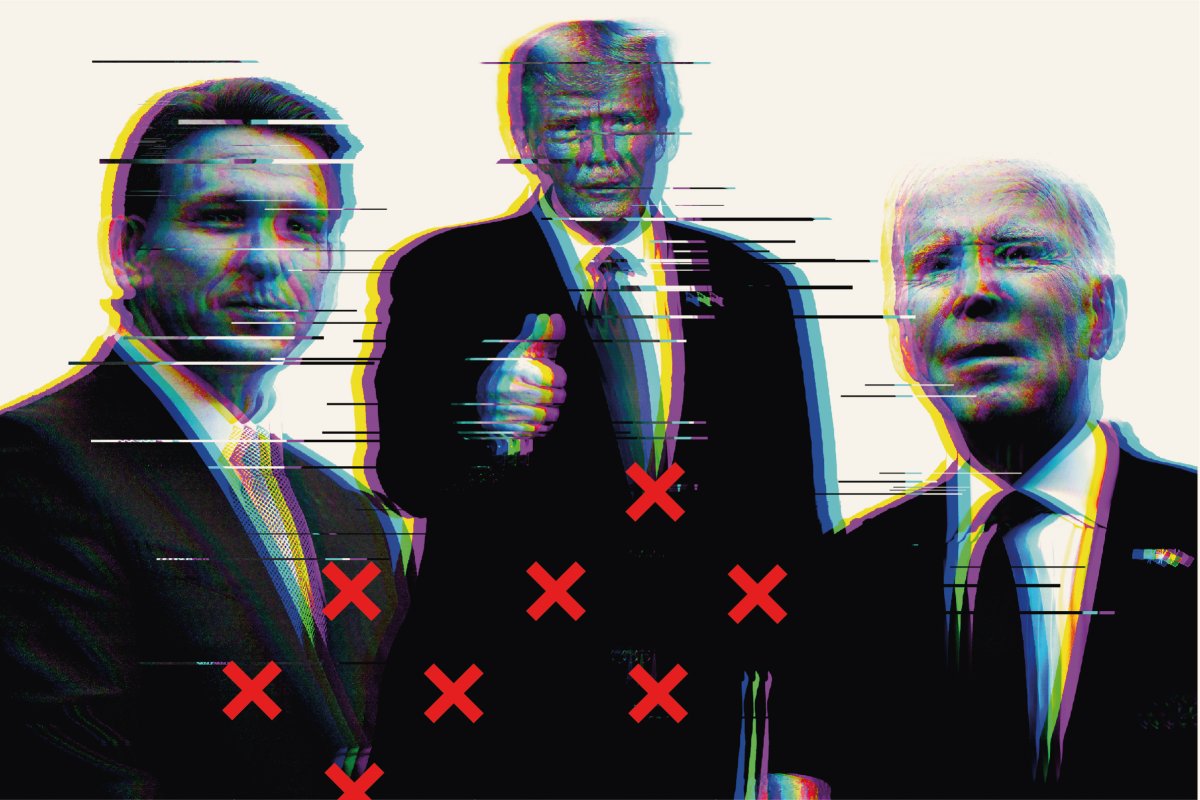

On March 20, British journalist Eliot Higgins, founder of the Bellingcat investigative website, posted an extraordinary series of images. They showed Donald Trump, former president and Republican frontrunner for 2024, being tackled to the ground by police officers. Somehow Trump slips from their grasp and runs down the street, with law enforcement in pursuit. He is caught once again as Melania appears to scream at officers, and is next pictured inside a prison cell.

The series continues with Trump in court, then prison, from which the former president makes a Shawshank Redemption-style escape and ends up in a McDonald's.

Making pictures of Trump getting arrested while waiting for Trump's arrest. pic.twitter.com/4D2QQfUpLZ

— Eliot Higgins (@EliotHiggins) March 20, 2023

If you're wondering whether you somehow missed the news event of the decade, rest assured that none of this actually happened, something Higgins was entirely clear about. Instead, the pictures were created as an intellectual experiment using Midjourney, one of a growing number of artificial intelligence-based image generators. They were deepfakes: synthetic media created with the aid of artificial intelligence.

Deepfakes have been around for a number of years, but what is changing, and at breakneck speed, is both their sophistication and the number of people who can create them.

This, combined with an increasingly conspiracy-laden American political culture, is sparking growing concerns about the next presidential election, with one expert telling Newsweek they expect deepfakes to be "deployed quite broadly" in 2024.

Can We Trust Our Eyes and Ears?

The term "deepfake" dates back to 2017, when a Reddit account using that name began posting AI-edited videos that superimposed the faces of female celebrities onto the original performers in pornographic scenes. This so-called "involuntary pornography" was the main type of deepfake for the first couple of years, but deepfakes are increasingly being used by political actors as well.

In March 2022, just weeks after the Russian invasion of Ukraine began, a deepfake appearing to show Volodymyr Zelensky ordering his soldiers to "lay down arms and return to your families" was spread online. And this month a deepfake video of President Biden appearing to introduce military conscription, via the Military Service Act, was shared by right-wing social media accounts.

The efforts remain imperfect—in one of Higgins' images, for example, one of Trump's fellow inmates has six fingers on one hand—but the direction of travel is clear.

It's not just the quality that's improving, but also the availability. To illustrate the point, I, a complete Luddite who thought python was a dangerous snake and java an island, was able to knock up a very crude audio deepfake in about 30 minutes.

In the clip embedded at the top of this story, I made Trump announce he is withdrawing from the 2024 presidential election and instead endorsing Ron DeSantis, "great guy, great governor." It's unlikely to fool anyone, but that's scarcely the point: if I can make an admittedly robotic-sounding Trump say anything I want, imagine what hostile foreign intelligence agencies can do.

The Threat to 2024

In an interview with Newsweek, Henry Ajder, a deepfakes expert who presents a podcast on the subject for the BBC, said he's particularly concerned about the 2024 presidential election following "the explosion we've seen [in] generative content" over the past year.

He said: "So for me 2024 is looking increasingly likely to be an election where deepfakes are deployed quite broadly.

"The real key question is whether there is an incredibly high-quality deepfake, which is very hard to authenticate or falsify, and is linked to a critical period in the electoral process. Say the eve of an election, or before one of the debates, and becomes part of the narrative from the opposition."

Ajder argued the threat is intensified by public ignorance of AI developments, stating: "A lot of people aren't familiar or used to the fact you can now clone voices quite convincingly, or that you can generate entirely new images of say Trump getting arrested as we've seen over the last couple of days on Twitter.

"So when people aren't inoculated, or aware, of the changing AI and information landscape, you start to see people lagging behind the understanding and becoming more susceptible."

Concern about 2024 was also expressed by Matthew F. Ferraro, a counsel at WilmerHale who specializes in emerging technology like deepfakes. Speaking to Newsweek, he said: "I expect that we will see many more deepfake videos of political figures and candidates circulate online between now and the 2024 presidential election. Many of these videos will be satirical or clear parodies.

"The more worrisome kind of deepfakes will be those that purport to show political figures in real events in an attempt to mislead voters about what is true and what is false. These deepfakes could confuse voters and help to undermine fair elections."

Ferraro added that America's thriving conspiracy theory scene could make the country particularly vulnerable, stating: "Deepfakes take society's existing problems of disinformation and conspiracism and pour jet fuel on them."

The Liars' Dividend

Sam Gregory, executive director of the Witness Media Lab, which studies deepfakes, argued that bad actors will not only use deepfakes to generate false content but will also exploit their existence to claim genuine media is computer-generated when it suits them.

Referring to the 2024 election, he told Newsweek: "Given changes in accessibility for deepfakes and other synthetic media I expect we'll see individuals sharing deceptive imagery for 'lolz' as well as to spread false information on candidates and the political process.

"We'll also see people claiming real footage is deepfakes, as well as claiming that because of the increasing prevalence of tools to make deepfakes that we can no longer trust any image.

"We'll likely see a few uses in organized campaign ads to make political points, and many examples where a photo created for satire or parody is re-used, stripped of context, to deceive."

Ajder agreed, commenting: "Deepfakes and generative AI have really opened the floodgates to plausible deniability and doubt around all media, not just fake media.

"So just as deepfakes can now make fake things look real, it also provides a really good excuse or point of potential conspiratorial thinking to deny real things as fake and we've seen this consistently.

"So we saw a Republican Congress candidate claim that the George Floyd video was a deepfake and wasn't real, we saw supporters of Trump saying his video condemning the insurrection at the Capitol wasn't real because he would never say something like that."

What Can We Do?

To counter the political threat from deepfakes, Siwei Lyu, an expert in the subject at the University at Buffalo, suggested a four-point plan to Newsweek.

He said: "The use of the generators needs to be more regulated, with certain contents (political, financial, or explicit) not allowed.

"The generated content must be labeled to remind the users of their synthetic nature. This can be a visible watermark or an invisible trace that can be picked up by a special algorithm. This will ensure that the deepfake content will not become viral without people noticing.

"Platforms need to implement moderation schemes to warn users of deepfakes.

"More user education to make users aware [of] the existence and impact of deepfakes."

Ajder also argued that some form of labeling will be key, and warned that existing deepfake detection software is of questionable value and will struggle to keep pace with the technology.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

James Bickerton is a Newsweek U.S. News reporter based in London, U.K. His focus is covering U.S. politics and world ...