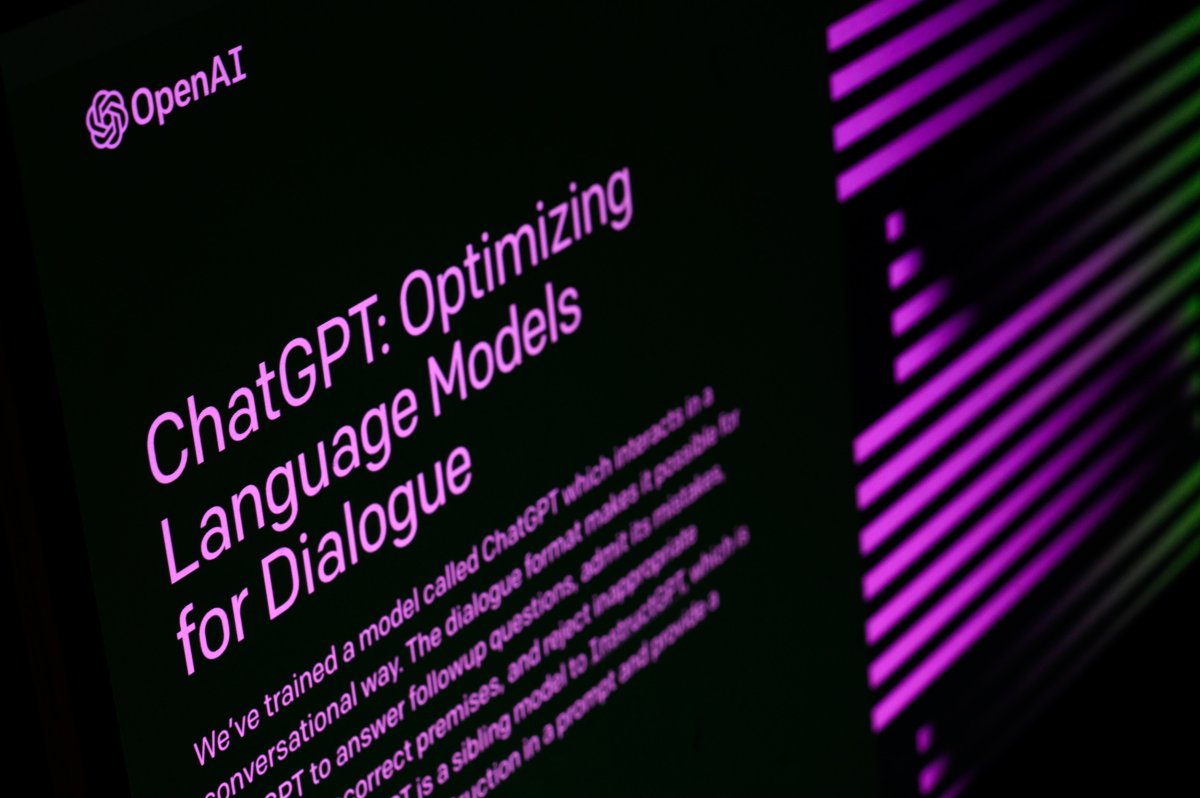

Unless you've been on some kind of monastic retreat for the past several months, you've heard about ChatGPT. It's the new AI tool that can whip up an essay, a poem, a bit of advertising copy—and a steady boil of hype and worry.

Artificial intelligence bots are already passing the Medical Licensing Exam and law and business school tests. But as a professor of education and a former middle and high school teacher who has spent decades studying how people learn with technology, I believe it's clear that the impact of ChatGPT on our schools goes far beyond helping teachers write lesson plans and students cheat on tests.

We should stop and take a breath—the robots can't do that, not yet anyway—and realize artificial intelligence like ChatGPT could change education for the better.

First, let's talk about what ChatGPT can and can't do. When you ask the AI a question just for fun—even a complex question like "What is a real estate investment trust?"—its answers are completely plausible. Spookily so. It feels like you're talking to someone who actually knows about personal finance.

No wonder something like 30 percent of working professionals have tried using ChatGPT at work.

But when you go a little deeper, the answers get a little rough around the edges. For example, try asking ChatGPT, "What are the diagnostic criteria for Inherited Truculence?"

For the record, "inherited truculence" is a made-up psychological disorder, but ChatGPT is happy to spout off a definition and list of symptoms. It's less an amazing oracle than the know-it-all sitting at the end of the bar. It speaks like an expert on everything but gets the answers all wrong.

Or to be more charitable, ChatGPT sounds like an undergraduate student who's skimmed the reading and is trying to fake their way through class.

This is because ChatGPT and AI models like it don't understand what they're saying. They've scoured the internet, and they try to guess which strings of words are most likely to look like an answer to your question.

To be fair, it's incredible that a machine can sound as intelligent as an undergraduate student who skimmed the reading. But that isn't the same as knowing what you're talking about.

Some people who teach undergraduates (including the kind who sometimes skim the reading) are worried that their students will use AI to cheat on assignments. Now, let's be clear: plagiarism is a serious problem.

But we've seen this kind of fear before.

When I started teaching, my peers said students needed to learn to do arithmetic quickly because they "won't always have a calculator in their pocket" or they might need to "make change for a customer if the cash register isn't working."

Well, guess what. We all have calculators on our cell phones, most of us don't use cash anymore, and if the register goes offline, the store closes.

It doesn't make sense to teach kids to do things that a calculator, or a computer, or AI can do for them.

So rather than trying to keep artificial intelligence out of our schools by banning it or making students write essays with a quill, ink, and parchment (just to be sure they aren't connected to the internet somehow), we should ask ourselves:

What are those one-third of professionals—and other people in the "real world"—doing with ChatGPT?

One group, AI for Good, is using the latest technology to predict missile attacks on Ukrainian homes and to create poems about self-determination for Afghan women in Pashto, Farsi, and Uzbek. Teen entrepreneur Christine Zhao uses ChatGPT in an app she created to help people with alexithymia (difficulty identifying and relating to one's own emotions) develop emotional awareness and build interpersonal relationships.

And these are precisely the kinds of skills students need to learn.

Rather than keeping the next generation from using AI—and rather than just saying "why worry?" and doing nothing—we need to help students learn to use AI to solve the complex social, economic, scientific, moral, and environmental issues that they will face when they become adults.

We need to think like the professor at Northern Michigan University who realized ChatGPT can write essays about the morality of burqa bans. Rather than ban ChatGPT, he's asking students in his world religions course to think critically in class about how the chatbot responds to questions about religion and ethics.

Our response to ChatGPT should be to change our education system to teach students to do the things that AI can't: Develop deep understanding about a topic, think critically—and, yes, learn how to take a breath and a step back to get a broader perspective on things. After all, as Mortimer Adler wrote, "Deep thinking is not just about finding answers; it's about asking the right questions."

We need to teach students how to use AI to answer the complex questions that are far beyond what AI can do on its own. AI can't solve the climate crisis, or move us toward social justice, or fix our broken political discourse, or keep social media from invading our privacy and fanning the flames of intolerance. It's people—people using AI—who can do those things. But only if we help them learn how.

So, should we worry that AI tools like ChatGPT can pass the Medical Licensing Exam? Absolutely. But we shouldn't be afraid that medical students will use ChatGPT to cheat.

If AI that doesn't really understand medicine (or much of anything else) can pass the test for being a doctor, then we need to change what we teach doctors—and everyone else.

David Williamson Shaffer is the Sears Bascom Professor of Learning Analytics and the Vilas Distinguished Achievement Professor of Learning Sciences at the University of Wisconsin-Madison and a Data Philosopher at the Wisconsin Center for Education Research.

The views expressed in this article are the writer's own.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.
